Logging is essential for software development, infrastructure management, and security work. Yet when a cluster is installed, the primary goal is usually just to get the installation running, and architectural requirements that seem unnecessary at the time are skipped. Audit logging is one of the things most often overlooked. If we run applications in production, however, we should actively use audit logging in the cluster. A default Kubernetes installation does not enable audit logging; most people turn it on only when they need it, and some never do.

Why should we enable audit logging?

Audit logging provides a comprehensive overview of everything in the cluster and helps you notice problems and take appropriate action when they occur.

Logging the creation, updating, and deletion of cluster objects such as deployments, services, and config maps will be beneficial for retrospective analysis when a faulty situation arises.

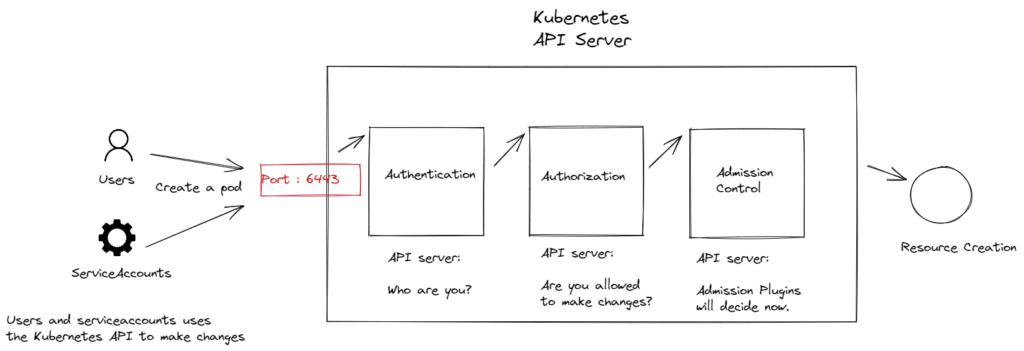

Logging the actions a user performs in the cluster also matters for security, since that user may be an attacker. When a user wants to change something in the cluster, a request is sent to the API server. The API server first authenticates the user, then checks whether they are authorized to perform the requested operation, and only then carries out the request. Logging these steps helps you detect unauthorized operations and act on them when necessary.

Audit logs let you answer questions such as: What happened? When did it happen? Who initiated it?
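As a rough illustration of how those questions map onto an audit record, the Python sketch below pulls the "who", "what", and "when" out of a single event. The field names follow the audit.k8s.io/v1 Event format; the sample values and the summarize helper are made up for illustration and are not part of any Kubernetes tooling.

```python
import json

# A trimmed audit event. Field names follow the audit.k8s.io/v1 Event
# format; the values are illustrative only.
sample_line = json.dumps({
    "kind": "Event",
    "apiVersion": "audit.k8s.io/v1",
    "stage": "ResponseComplete",
    "verb": "delete",
    "user": {"username": "jane", "groups": ["system:authenticated"]},
    "sourceIPs": ["10.0.0.7"],
    "objectRef": {"resource": "deployments", "namespace": "default", "name": "web"},
    "responseStatus": {"code": 200},
    "requestReceivedTimestamp": "2023-03-26T13:15:02.539459Z",
})


def summarize(line: str) -> dict:
    """Hypothetical helper: reduce one audit log line to who/what/when."""
    event = json.loads(line)
    ref = event.get("objectRef", {})
    return {
        "who": event.get("user", {}).get("username", "unknown"),
        "what": f'{event.get("verb")} {ref.get("resource")}/{ref.get("name")}',
        "where": ref.get("namespace", ""),
        "when": event.get("requestReceivedTimestamp"),
        "status": event.get("responseStatus", {}).get("code"),
    }


summary = summarize(sample_line)
print(summary)
```

Since the log file backend writes one JSON event per line, the same helper could be applied line by line to an audit log file.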

How does audit logging work?

When an operation is performed in a Kubernetes cluster, whether by a user or a service account, the request first goes to the API server. Requests can be sent with kubectl, client libraries, or direct REST calls.

Audit logging is built into the Kubernetes API server, which can record an event at each stage of handling a request: RequestReceived, ResponseStarted, ResponseComplete, and Panic.

How is Audit Policy configured in Kubernetes?

By default, no audit policy is configured, so the API server records no audit events. Kubernetes Event objects are stored in etcd, the cluster's backing database, but by default they are kept for only one hour (the API server's event-ttl setting). An hour is rarely enough for a thorough look at the past or for tracking down the cause of an issue.

To activate Audit logging in the cluster, we need an Audit Policy file. The Policy file specifies which records will be kept and what they will contain.

There are four predefined log levels:

- None: Don't log events that match this rule.

- Metadata: Log request metadata (requesting user, timestamp, resource, verb, etc.) but not the request or response body.

- Request: Log event metadata and the request body, but not the response body.

- RequestResponse: Log event metadata plus the request and response bodies.
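Rules in a policy are checked in order, and the first rule that matches a request decides its level. The Python sketch below is a deliberately simplified model of that behaviour, not the API server's implementation: the RULES list, the RECORDED table, and the audit_level function are hypothetical names, and only resource names are matched.

```python
# Simplified stand-in for the "rules" list of an audit policy. Only resource
# names are matched here; real policies can also match users, verbs,
# namespaces, and API groups.
RULES = [
    {"level": "Metadata", "resources": ["secrets"]},
    {"level": "Request", "resources": None},  # None here means "any resource"
]

# What each level records, as described in the list above.
RECORDED = {
    "None": [],
    "Metadata": ["metadata"],
    "Request": ["metadata", "request body"],
    "RequestResponse": ["metadata", "request body", "response body"],
}


def audit_level(resource, rules=RULES):
    """Return the level of the first rule matching the resource."""
    for rule in rules:
        if rule["resources"] is None or resource in rule["resources"]:
            return rule["level"]
    # Assumption in this sketch: a request that matches no rule is not logged.
    return "None"


for resource in ("secrets", "pods"):
    level = audit_level(resource)
    print(resource, level, RECORDED[level])
```

Because the secrets rule comes first, secrets stay at the Metadata level even though the catch-all rule would also match them. Placing specific rules before general ones is what makes this work.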

For example, to log pods at the Metadata level and secrets at the RequestResponse level, we can write an Audit Policy file as follows.

    vi /etc/kubernetes/audit-policy.yaml

The file contents:

    apiVersion: audit.k8s.io/v1 # This is required.
    kind: Policy
    # Don't generate audit events for all requests in RequestReceived stage.
    omitStages:
      - "RequestReceived"
    rules:
      # Log pod changes at Metadata level
      - level: Metadata
        resources:
        - group: ""
          resources: ["pods"]
      # Log secret changes in all namespaces at the RequestResponse level.
      - level: RequestResponse
        resources:
        - group: "" # core API group
          resources: ["secrets"]

After creating the audit policy file on the Kubernetes control plane server, we can pass it to kube-apiserver with the --audit-policy-file flag.

    vi /etc/kubernetes/manifests/kube-apiserver.yaml

We add the audit-policy-file parameter to kube-apiserver.yaml as an entry in the kube-apiserver container's command list.

    --audit-policy-file=/etc/kubernetes/audit-policy.yaml

When referencing the audit policy file, we also need to mount it into the API server pod as a volume so that the API server can read the file we created.

    volumeMounts:
      - mountPath: /etc/kubernetes/audit-policy.yaml
        name: audit
        readOnly: true

    volumes:
      - name: audit
        hostPath:
          path: /etc/kubernetes/audit-policy.yaml
          type: File

The audit policy file defines which events are logged, but we have not yet told the API server where to write the logs or how long to keep them. The audit-log-path flag sets the location of the log file. If audit-log-path is not set, the log backend is disabled and no audit log file is written; a value of - writes the events to standard output. For example, the following flag stores the log records in /var/log/kubernetes/audit/audit.log.

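    --audit-log-path=/var/log/kubernetes/audit/audit.log

We can also use the following API server flags to control retention: audit-log-maxage is the maximum number of days to keep old log files, and audit-log-maxbackup is the maximum number of old log files to keep. A third flag, audit-log-maxsize, sets the maximum size in megabytes of a log file before it is rotated.

    --audit-log-maxage=10
    --audit-log-maxbackup=5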
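To get a feel for how these settings bound disk usage, here is a back-of-the-envelope estimate in Python. It assumes the usual rotation behaviour, where the active file grows to at most audit-log-maxsize megabytes and at most audit-log-maxbackup rotated files are retained. The 100 MB size is an assumed value, not one taken from this setup, and the function name is hypothetical.

```python
def max_audit_log_disk_mb(maxsize_mb: int, maxbackup: int) -> int:
    """Rough upper bound: the active file plus the retained rotated files."""
    if maxsize_mb <= 0 or maxbackup <= 0:
        # This sketch only covers explicit positive limits.
        raise ValueError("maxsize_mb and maxbackup must be positive")
    return maxsize_mb * (maxbackup + 1)


# maxbackup=5 matches the flag above; maxsize_mb=100 is an assumption.
estimate = max_audit_log_disk_mb(maxsize_mb=100, maxbackup=5)
print(f"worst case: about {estimate} MB")
```

Files older than audit-log-maxage days are removed as well, so actual usage may be lower than this bound.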

We also need to mount the directory where the logs are stored into the API server pod so that the API server can write to it.

    volumeMounts:
      - mountPath: /var/log/kubernetes/audit/
        name: audit-log
        readOnly: false

    volumes:
      - name: audit-log
        hostPath:
          path: /var/log/kubernetes/audit/
          type: DirectoryOrCreate

That's all you need to do to enable audit logging in Kubernetes. After the API server manifest file is changed, the API server restarts and audit logging is enabled.

Let’s create a secret with the kubectl command in the cluster and check the audit logs.

    kubectl create secret generic creds --from-literal=username=user --from-literal=password=password

After we create the secret, an event recording its creation is written to /var/log/kubernetes/audit/audit.log on the control plane server. We can find the event as follows.

    sudo cat /var/log/kubernetes/audit/audit.log | grep secret | grep -i creds

    {
      "kind":"Event",
      "apiVersion":"audit.k8s.io/v1",
      "level":"RequestResponse",
      "auditID":"d3d4af88-f76f-4da6-9786-d443680ee966",
      "stage":"ResponseComplete",
      "requestURI":"/api/v1/namespaces/default/secrets?fieldManager=kubectl-create\u0026fieldValidation=Strict",
      "verb":"create",
      "user":{
        "username":"kubernetes-admin",
        "groups":["system:masters","system:authenticated"]
      },
      "sourceIPs":["172.31.123.178"],
      "userAgent":"kubectl/v1.24.0 (linux/amd64) kubernetes/4ce5a89",
      "objectRef":{"resource":"secrets","namespace":"default","name":"creds","apiVersion":"v1"},
      "responseStatus":{"metadata":{},"code":201},
      "requestObject":{
        "kind":"Secret",
        "apiVersion":"v1",
        "metadata":{"name":"creds","creationTimestamp":null},
        "data":{"password":"cGFzc3dvcmQ=","username":"dXNlcg=="},
        "type":"Opaque"
      },
      "responseObject":{
        "kind":"Secret",
        "apiVersion":"v1",
        "metadata":{
          "name":"creds",
          "namespace":"default",
          "uid":"e2402082-440f-4329-bedf-5fa7e2a125d7",
          "resourceVersion":"1918",
          "creationTimestamp":"2023-03-26T13:15:02Z",
          "managedFields":[{
            "manager":"kubectl-create",
            "operation":"Update",
            "apiVersion":"v1",
            "time":"2023-03-26T13:15:02Z",
            "fieldsType":"FieldsV1",
            "fieldsV1":{
              "f:data":{".":{},"f:password":{},"f:username":{}},
              "f:type":{}
            }
          }]
        },
        "data":{"password":"cGFzc3dvcmQ=","username":"dXNlcg=="},
        "type":"Opaque"
      },
      "requestReceivedTimestamp":"2023-03-26T13:15:02.539459Z",
      "stageTimestamp":"2023-03-26T13:15:02.544608Z",
      "annotations":{"authorization.k8s.io/decision":"allow","authorization.k8s.io/reason":""}
    }

As the event shows, the log contains detailed information about who created the secret, when it was created, and the contents of the secret. The data values are only base64-encoded, not encrypted, so anyone who can read the audit log can recover them. Sensitive resources such as secrets should therefore be logged at the Metadata level. If you log secrets at the RequestResponse level, as we did in this example, their contents will appear in the audit logs.